KB - SIEMonster - Legacy Archive Restore Guide 1.0.0

SIEMonster Legacy Archive Restore

This guide explains how to restore legacy archive logs from AWS S3 object storage. It covers the restore process only; for any additional information, please consult the SIEMonster documentation.

Introduction

This guide supplements the standard Elasticsearch restore method with a process for restoring archived Wazuh alert logs while respecting the existing ETL structure.

Use this method for archives created with the S3 archive method that predates the OpenSearch Snapshot method.

Prerequisites

The following actions need to be performed only once to configure the environment for ongoing restore activities.

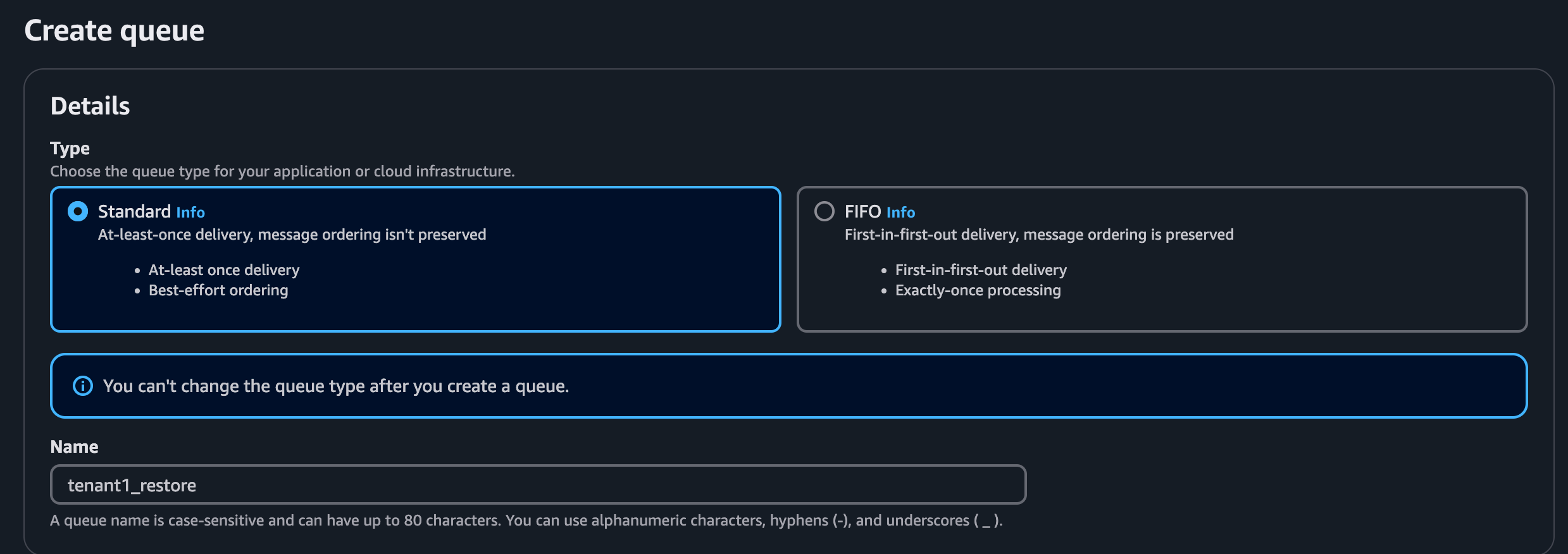

Configure an SQS Queue for each tenant.

Example:

Replace ‘tenant1’ with the appropriate tenant name.

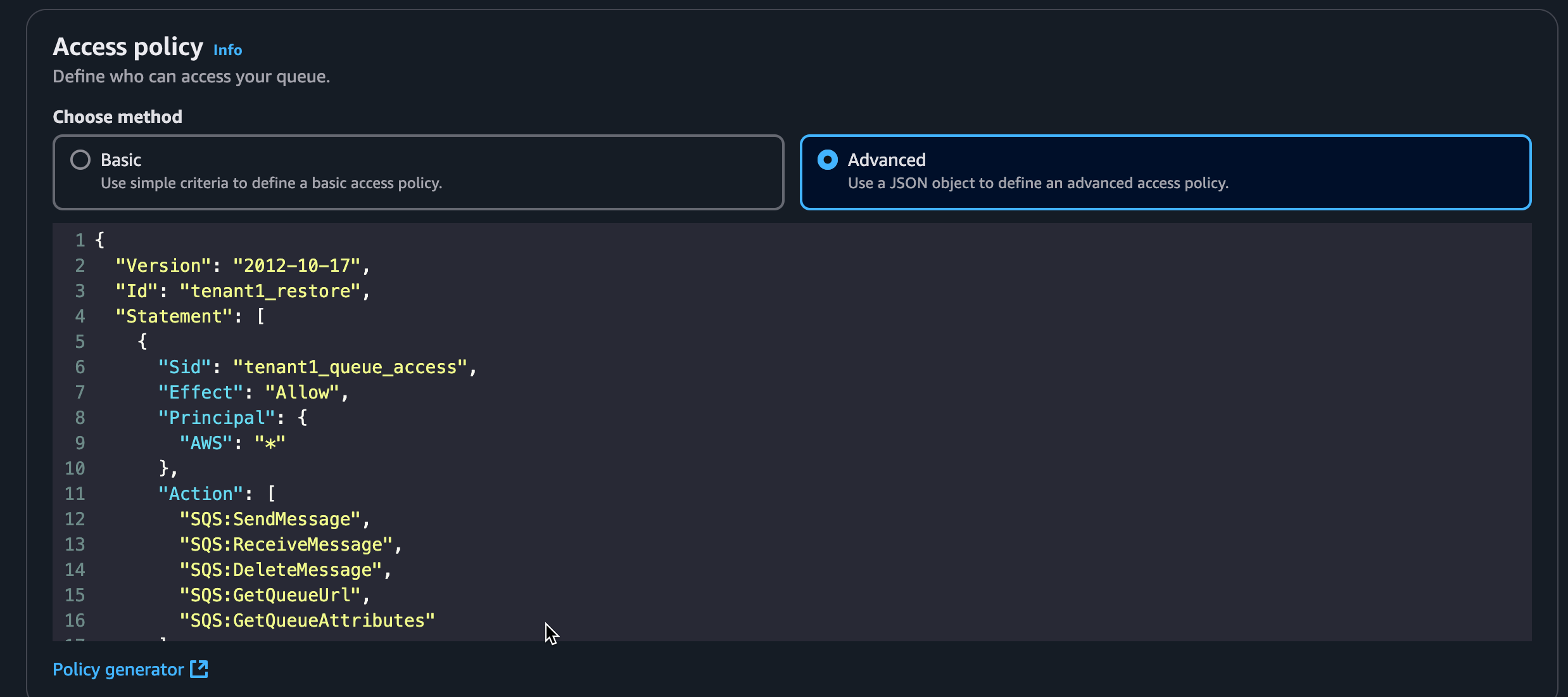

For the access policy, select the Advanced option and use the following template:

https://gitlab.com/-/snippets/4792359

Replace the Id value with the relevant tenant and the Resource value with the SQS Queue ARN.

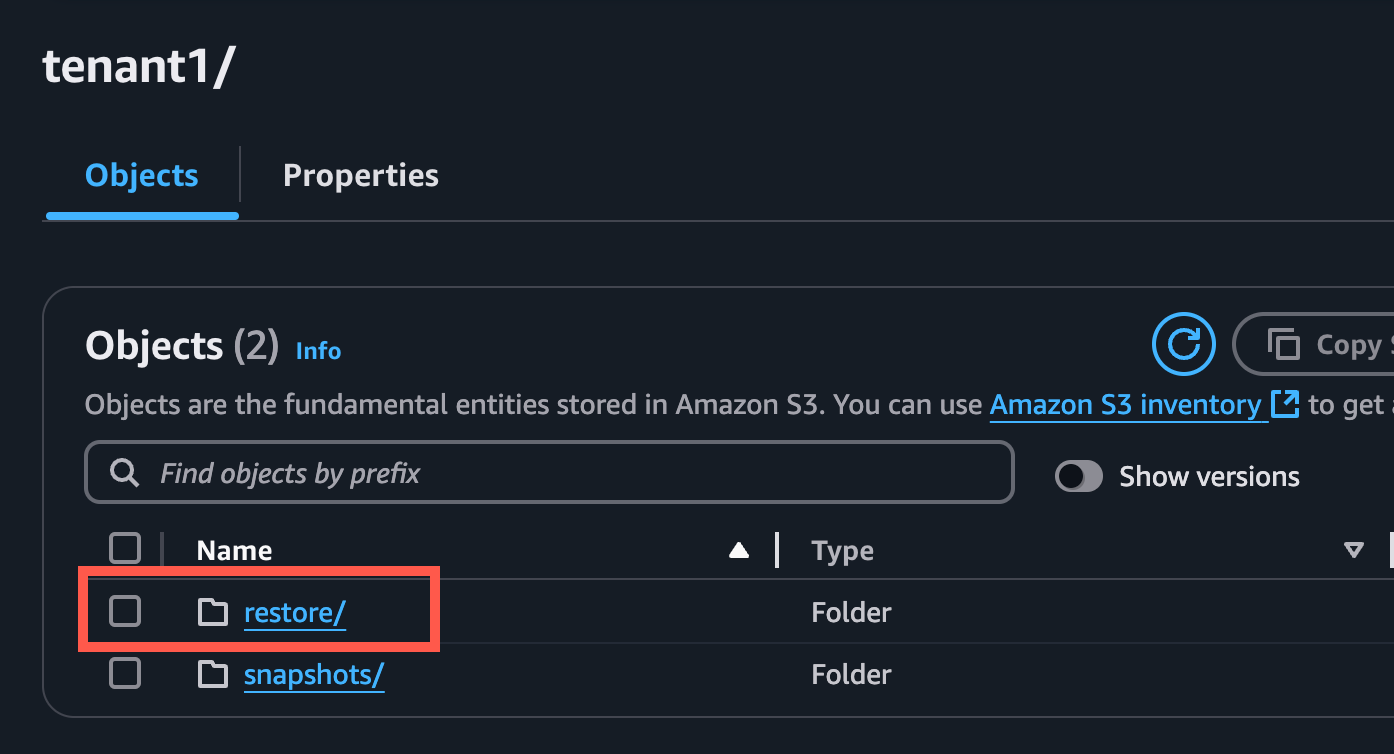
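The template behind the snippet link is not reproduced in this guide. As a rough illustration only, assuming it follows the standard AWS pattern for letting S3 publish event notifications to an SQS queue, the sketch below builds a policy of that shape. The function name build_queue_policy, the Id format and the source-bucket conditions are assumptions; the bucket name, account ID and queue name are the example values used elsewhere in this guide.

```python
import json


def build_queue_policy(tenant: str, queue_arn: str, bucket_name: str, account_id: str) -> dict:
    """Hypothetical helper: an SQS access policy that lets S3 deliver event notifications.

    Assumes the standard AWS S3-to-SQS policy pattern; the snippet template may differ.
    """
    return {
        "Version": "2012-10-17",
        # Assumed Id format; the guide only says the Id should name the tenant.
        "Id": f"{tenant}_restore_policy",
        "Statement": [
            {
                "Sid": "AllowS3EventNotifications",
                "Effect": "Allow",
                "Principal": {"Service": "s3.amazonaws.com"},
                "Action": "sqs:SendMessage",
                # The guide asks for Resource to be the SQS Queue ARN.
                "Resource": queue_arn,
                "Condition": {
                    # Only accept notifications from this bucket, owned by this account.
                    "ArnLike": {"aws:SourceArn": f"arn:aws:s3:::{bucket_name}"},
                    "StringEquals": {"aws:SourceAccount": account_id},
                },
            }
        ],
    }


if __name__ == "__main__":
    policy = build_queue_policy(
        tenant="tenant1",
        queue_arn="arn:aws:sqs:eu-central-1:123456789123:tenant1_restore",
        bucket_name="velero-efc1a3e0",
        account_id="123456789123",
    )
    print(json.dumps(policy, indent=2))
```

Where this sketch and the snippet template disagree, the template should be followed; the sketch is mainly useful for checking that the Id and Resource values have been replaced.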

Create a restore folder within the tenant folder of the S3 Bucket:

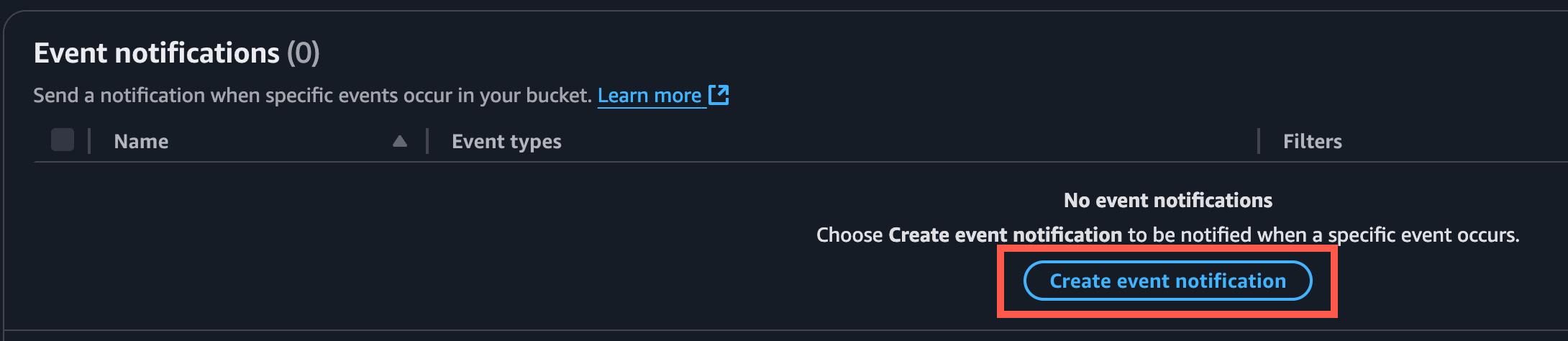

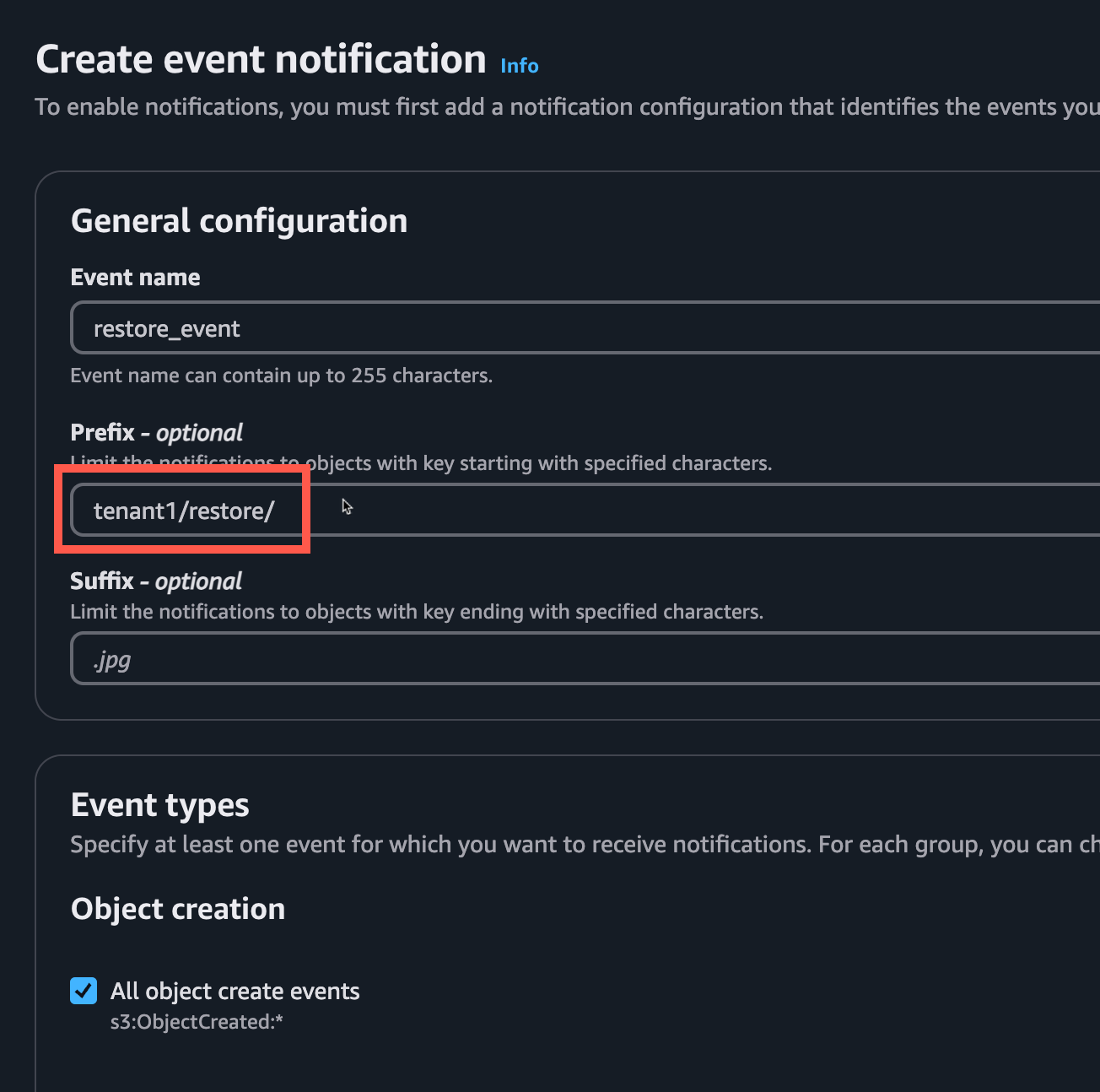

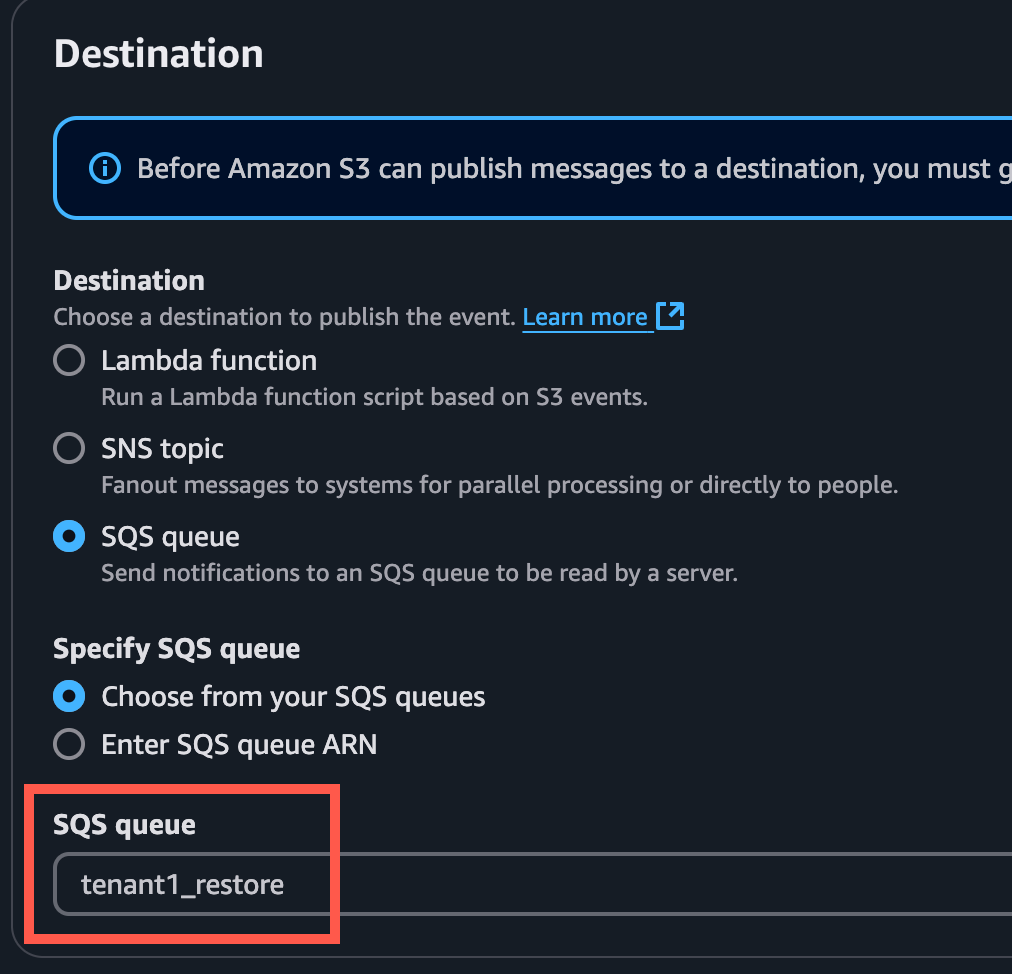

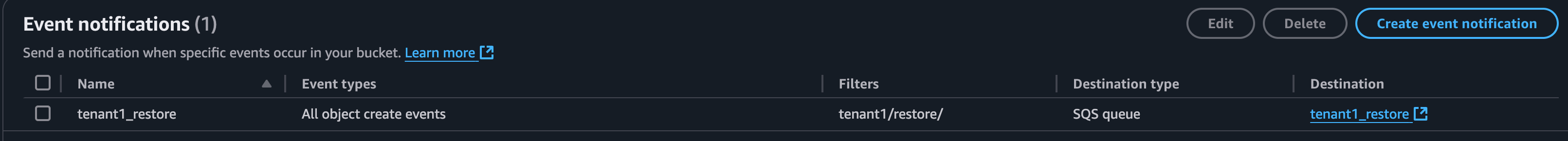

Within the S3 Bucket properties, create a new Event Notification to send to the SQS Queue:

Be sure to include the trailing forward slash in the Prefix, e.g. tenant1/restore/.

Check the ‘All object create events’ box.

Choose the SQS Queue created in the previous step.
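For reference, the console steps above correspond roughly to the NotificationConfiguration structure used by the S3 PutBucketNotificationConfiguration API. The minimal sketch below builds that structure for one tenant; build_notification_config, prefix_matches and the rule Id are hypothetical names. It also shows why the trailing slash matters: S3 prefix filters compare plain string prefixes, so tenant1/restore without the slash would also match keys such as tenant1/restore_old/.

```python
import json


def build_notification_config(queue_arn: str, tenant: str) -> dict:
    """Sketch of the event notification created in the console for one tenant."""
    prefix = f"{tenant}/restore/"  # trailing slash, as the guide requires
    return {
        "QueueConfigurations": [
            {
                "Id": f"{tenant}-restore",  # hypothetical rule name
                "QueueArn": queue_arn,
                # Equivalent of ticking 'All object create events'
                "Events": ["s3:ObjectCreated:*"],
                "Filter": {"Key": {"FilterRules": [{"Name": "prefix", "Value": prefix}]}},
            }
        ]
    }


def prefix_matches(key: str, prefix: str) -> bool:
    # S3 key filters are plain string prefixes, not folder boundaries.
    return key.startswith(prefix)


if __name__ == "__main__":
    cfg = build_notification_config("arn:aws:sqs:eu-central-1:123456789123:tenant1_restore", "tenant1")
    print(json.dumps(cfg, indent=2))
    # Without the slash, a sibling "folder" would also trigger notifications.
    print(prefix_matches("tenant1/restore_old/a.json", "tenant1/restore"))
    print(prefix_matches("tenant1/restore_old/a.json", "tenant1/restore/"))
```

If this structure were applied through the API (for example with boto3's put_bucket_notification_configuration), the call replaces the bucket's entire notification configuration, so any existing rules would have to be included as well.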

Add an SQS pipeline source to the Vector configuration:

Make a backup copy of the Vector configuration file (/vector-data-dir/aggregator.yaml), then edit it and add an SQS Queue source pipeline using the following template:

https://gitlab.com/-/snippets/4792362

Replace the queue_url value with the URL (not the ARN) of the configured SQS Queue.

Example:

```yaml
sources:
  restore:
    type: "aws_s3"
    framing:
      method: newline_delimited
    region: eu-central-1
    compression: none
    sqs:
      # URL (not ARN) of the tenant's restore queue
      queue_url: "https://sqs.eu-central-1.amazonaws.com/123456789123/tenant1_restore"
```
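Vector's aws_s3 source reads from the queue given in sqs.queue_url, which expects the queue URL rather than its ARN. For standard queues in the commercial AWS partition, the URL can be derived from the ARN, since both carry the region, account ID and queue name. The helper below is a hypothetical sketch of that mapping; copying the URL from the queue's details page in the SQS console remains the safer source.

```python
def queue_url_from_arn(queue_arn: str) -> str:
    """Hypothetical helper: map an SQS queue ARN to its standard queue URL.

    arn:aws:sqs:<region>:<account-id>:<queue-name>
      -> https://sqs.<region>.amazonaws.com/<account-id>/<queue-name>
    Assumes the commercial AWS partition endpoint format.
    """
    parts = queue_arn.split(":")
    if len(parts) != 6 or parts[0] != "arn" or parts[2] != "sqs":
        raise ValueError(f"not an SQS queue ARN: {queue_arn!r}")
    _, _, _, region, account_id, queue_name = parts
    return f"https://sqs.{region}.amazonaws.com/{account_id}/{queue_name}"


if __name__ == "__main__":
    print(queue_url_from_arn("arn:aws:sqs:eu-central-1:123456789123:tenant1_restore"))
```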

Add the new source to the inputs of the wazuh_parser transform:

```yaml
transforms:
  wazuh_parser:
    type: remap
    drop_on_error: true
    reroute_dropped: true
    # append the new "restore" source to the existing inputs
    inputs: ["kafka_pipeline", "fluent", "restore"]
    source: |
      . = parse_json!(string!(.message))
      del(.@metadata)
      .@timestamp = (.timestamp)
      .tmp_date = parse_timestamp!(.timestamp, format: "%+")
      .event_date = format_timestamp!(.tmp_date, format: "%Y.%m.%d")
      del(.tmp_date)
```
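To make the transform's effect on a restored line easier to follow, the sketch below approximates the same steps in Python. It is an illustration only (Vector executes the VRL itself), and it assumes Wazuh timestamps of the form 2024-03-06T10:15:23.123+0000 and that VRL formats event_date in UTC; parse_restored_line is a hypothetical name. Because event_date is taken from the alert's own timestamp, a restored alert keeps the date it originally occurred on rather than the date of the restore.

```python
import json
from datetime import datetime, timezone


def parse_restored_line(line: str) -> dict:
    """Approximate the wazuh_parser VRL program for one archived alert line."""
    event = json.loads(line)                    # . = parse_json!(string!(.message))
    event.pop("@metadata", None)                # del(.@metadata)
    event["@timestamp"] = event["timestamp"]    # .@timestamp = (.timestamp)
    # Assumed Wazuh format; VRL's "%+" accepts a wider range of ISO 8601 forms.
    ts = datetime.strptime(event["timestamp"], "%Y-%m-%dT%H:%M:%S.%f%z")
    # Assumption: VRL formats the timestamp in UTC, so convert before formatting.
    event["event_date"] = ts.astimezone(timezone.utc).strftime("%Y.%m.%d")
    return event


if __name__ == "__main__":
    sample = '{"timestamp": "2024-03-06T10:15:23.123+0000", "rule": {"id": "5715"}}'
    print(parse_restored_line(sample)["event_date"])
```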

Restart the Vector pod so that it loads the new configuration, either by deleting the pod or by scaling the StatefulSet (STS) down and back up.

Usage

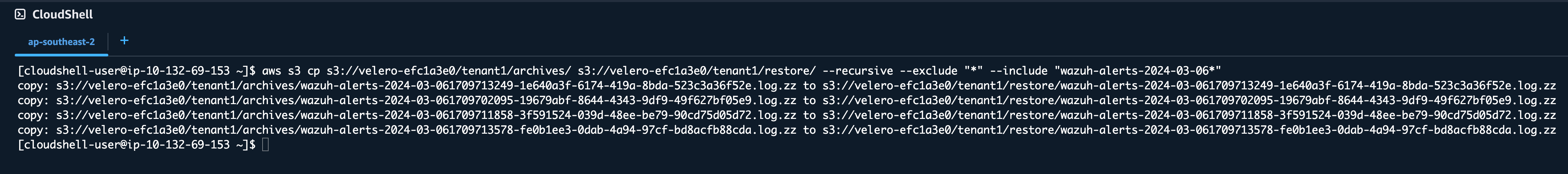

The simplest restore method is to use AWS CloudShell: determine the dates of the Wazuh archives to restore, and then copy them with the aws s3 cp command.

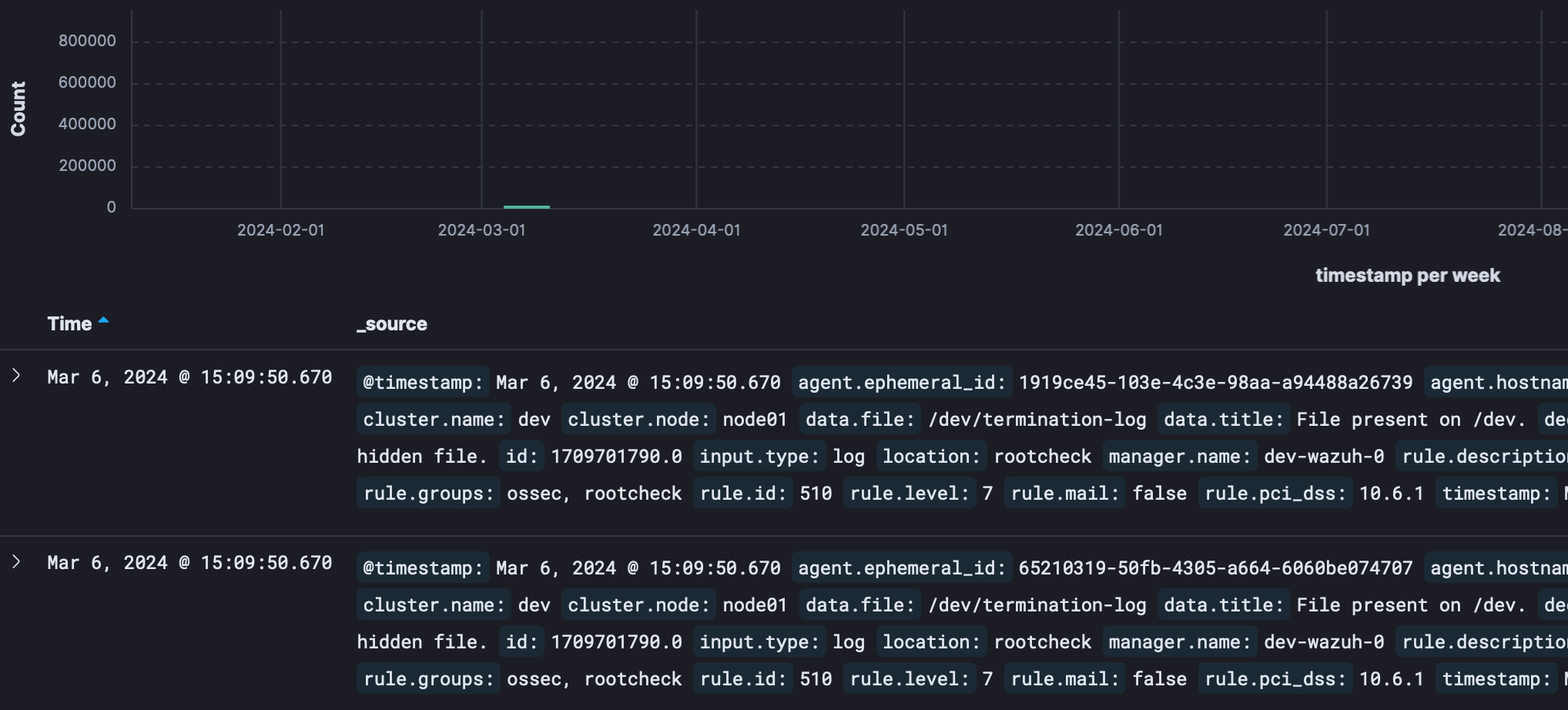

Example (restoring logs from March 6, 2024):

Assuming archived Wazuh alert logs are stored in ‘tenant1/archives/’, copy the files whose names begin with the required date into the ‘tenant1/restore/’ folder:

```shell
aws s3 cp s3://velero-efc1a3e0/tenant1/archives/ s3://velero-efc1a3e0/tenant1/restore/ --recursive --exclude "*" --include "wazuh-alerts-2024-03-06*"
```
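When several consecutive days are needed, the same command can be extended with one --include filter per day. The AWS CLI evaluates --exclude and --include filters in order, with later filters taking precedence, so everything is first excluded and only the matching dates are re-included. The sketch below only builds the argument list; it assumes archive objects follow the wazuh-alerts-YYYY-MM-DD naming seen in the example, and build_restore_command is a hypothetical helper.

```python
import shlex
from datetime import date, timedelta


def build_restore_command(bucket: str, tenant: str, start: date, end: date) -> list[str]:
    """Build aws s3 cp arguments that restore every day from start to end (inclusive).

    Assumes archive objects are named wazuh-alerts-YYYY-MM-DD*.
    """
    if end < start:
        raise ValueError("end date is before start date")
    args = [
        "aws", "s3", "cp",
        f"s3://{bucket}/{tenant}/archives/",
        f"s3://{bucket}/{tenant}/restore/",
        "--recursive",
        "--exclude", "*",  # exclude everything first...
    ]
    day = start
    while day <= end:
        # ...then re-include one date pattern per day (later filters win).
        args += ["--include", f"wazuh-alerts-{day.isoformat()}*"]
        day += timedelta(days=1)
    return args


if __name__ == "__main__":
    cmd = build_restore_command("velero-efc1a3e0", "tenant1", date(2024, 3, 5), date(2024, 3, 7))
    print(shlex.join(cmd))
```

The printed command can be run in CloudShell; adding --dryrun first lists what would be copied without copying anything.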

After a short time, the restored events become visible in the Event Discovery pane: